Basic System Design Concepts for Interview

Why is system design important?

For large-scale applications, system design provides a high-level understanding of the components and their relationships. It helps us define the architecture by breaking the system down into smaller components. Effective system design also affects the success of a software project by ensuring that the resulting system is well structured, maintainable, and meets the needs of its users.

Tech professionals must therefore understand system design concepts to make informed decisions about trade-offs. System design is also one of the key topics that tech companies ask about during interviews.

What is System Design?

System design deals with designing the structure of a software system to ensure that it meets desired functionality, performance, scalability, reliability, and other quality attributes. It is the process of defining the architecture, components, data, etc., for a system to satisfy functional and non-functional requirements.

When designing such systems, it is essential to have an excellent understanding of key system design terms like scalability (horizontal and vertical), caching, load balancing, data partitioning, various types of databases, network protocols, database sharding, etc. Similarly, one must consider various trade-offs like latency vs throughput, performance vs scalability, consistency vs availability, etc. Overall, system design is an open-ended discussion topic. That's why most tech firms prefer to include system design interview rounds.

Some key steps involved in designing a system:

- Requirement analysis: Understanding the functional and non-functional requirements.

- High-level design: Defining the overall structure, components and their interactions.

- Database design: Designing the structure of the database(s) like defining tables, relationships, indexing strategies, data access patterns, etc.

- API design: Designing APIs through which users or other systems interact with the system.

- Component design: Designing individual components, their responsibilities and interactions.

- Scalability and performance: Ensuring that the system can handle its expected workload efficiently and can scale to accommodate increasing workload demand.

- Reliability and fault tolerance: Designing the system to minimize the impact of failures and to recover gracefully from errors or faults.

- Security: Protecting the system from unauthorised access, data breaches, and other security threats.

- Testing: Designing tests to verify that the system meets its requirements and performs as expected.

- Documentation: Documenting the design decisions, architecture and other relevant information to facilitate understanding, maintenance, and future development of the system.

Now let’s dive in to get familiar with the essential concepts used in system design.

Availability

Availability means that the system should remain up and respond to client requests. In other words, whenever a user wants to use the service, the system must be available to satisfy the request.

Availability can be quantified as the percentage of time the system remains operational in a given time window. We usually express the availability of a system in terms of the number of 9s (as shown in the table below).
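To make the "number of 9s" idea concrete, here is a minimal sketch that converts a count of nines into the maximum downtime allowed per year. The function names are hypothetical and the calculation assumes a 365-day year.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumption: leap years are ignored


def availability_for_nines(nines: int) -> float:
    """Return availability as a fraction, e.g. 3 nines -> 0.999."""
    return 1 - 10 ** (-nines)


def max_downtime_seconds_per_year(nines: int) -> float:
    """Return the downtime budget per year for the given number of nines."""
    return SECONDS_PER_YEAR * 10 ** (-nines)


for n in range(1, 6):
    percent = availability_for_nines(n) * 100
    hours = max_downtime_seconds_per_year(n) / 3600
    print(f"{n} nines ({percent:.3f}%): up to {hours:.2f} hours of downtime per year")
```

Each additional nine cuts the downtime budget by a factor of ten, which is why every extra nine is much harder to achieve than the previous one.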

To design a highly available system, we need to consider several factors like replication, load balancing, failover mechanisms, disaster recovery strategies, etc. Our goal should be to minimize downtime and ensure that the system is accessible and functioning as intended.

Throughput

In computer systems, throughput is one of the key metrics used to measure the performance and capacity of a system in handling tasks, transactions, or operations within a given time interval. Similarly in the context of a network, it represents the amount of data transferred successfully over a network within a certain timeframe.

High throughput is crucial when handling large volumes of data or a high number of concurrent requests. One effective way to achieve high throughput is to split the requests and distribute them across multiple machines.

Latency

Latency is another important metric related to system performance. It measures the time taken to produce a result. In other words, it is the time between sending a request and receiving the response from the server.

- Latency can be affected by various factors like speed of processing, network congestion, distance between the source and destination, and the efficiency of the system's components. Lower latency means quick response times, higher system performance and a smooth user experience.

- Reducing latency is one of the key goals in optimizing performance in applications where real-time responsiveness is critical like online gaming, video streaming, financial trading, etc.

- Strategies for reducing latency: Optimizing algorithms, improving network infrastructure, using faster hardware components, minimizing unnecessary processing steps, etc.
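Throughput and latency are easiest to compare when computed from the same set of measurements. The sketch below is illustrative only: the `summarize` function and the sample values are made up, and the 95th percentile uses the simple nearest-rank method.

```python
import math


def summarize(latencies_ms: list[float], window_seconds: float) -> dict[str, float]:
    """Summarize requests completed in one time window (hypothetical helper)."""
    ordered = sorted(latencies_ms)
    rank = math.ceil(0.95 * len(ordered))  # nearest-rank 95th percentile
    return {
        "throughput_rps": len(ordered) / window_seconds,
        "avg_latency_ms": sum(ordered) / len(ordered),
        "p95_latency_ms": ordered[rank - 1],
    }


# Ten made-up response times (in milliseconds) observed over a 2-second window.
sample = [12.0, 15.0, 11.0, 240.0, 14.0, 13.0, 16.0, 12.0, 15.0, 12.0]
print(summarize(sample, window_seconds=2.0))
```

A single slow request barely changes the throughput but dominates the tail latency, which is why the two metrics are usually tracked separately.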

Network Protocols

Almost every system depends on a network, which acts as the platform for communication between users and servers or between different servers. Protocols are the rules that govern how machines communicate over the network. Common network protocols include HTTP, TCP/IP, etc.
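As a small illustration of a protocol in action, the sketch below starts a throwaway HTTP server on the local machine and sends it one GET request using only Python's standard library. The handler class, the `fetch_once` function, and the response body are hypothetical; HTTP here runs on top of a TCP connection to `127.0.0.1`.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a fixed plain-text body."""

    def do_GET(self) -> None:
        body = b"hello"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args: object) -> None:
        pass  # keep the example quiet


def fetch_once() -> tuple[int, bytes]:
    """Start a local server, send one request, and return (status, body)."""
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0: OS picks a free port
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
        conn.request("GET", "/")
        response = conn.getresponse()
        result = (response.status, response.read())
        conn.close()
        return result
    finally:
        server.shutdown()
        server.server_close()


print(fetch_once())
```

The client and server never share memory; they only agree on the rules of the protocol (request line, headers, status code, body), which is what lets independent machines interoperate.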

Load Balancing

Load balancers are machines or software components that distribute incoming requests among multiple servers. To scale the system, we can keep adding servers, so there must be a way to direct requests to them such that no single server is heavily loaded. In other words, load balancers prevent any one server from becoming a bottleneck or a single point of failure.

- Load balancers distribute traffic, prevent service from breakdown, and contribute to the system reliability.

- Load balancers act as traffic managers and help us to maintain system throughput and availability.
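One of the simplest ways to distribute requests is round robin, where each new request goes to the next server in a fixed rotation. The sketch below is a minimal illustration with hypothetical server names; real load balancers also handle health checks and uneven server capacity.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation (illustrative only)."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def next_server(self) -> str:
        """Return the server that should handle the next request."""
        return next(self._rotation)


balancer = RoundRobinBalancer(["server-a", "server-b", "server-c"])
assigned = [balancer.next_server() for _ in range(6)]
print(assigned)
```

With three servers and six requests, each server receives exactly two requests, so no single machine carries the whole load.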

Proxies

A proxy sits between the client and the server. When a client sends a request, it passes through the proxy and then reaches the server. Proxies are of two types: forward proxy and reverse proxy. A forward proxy acts as a mask for clients and hides the client’s identity from the server. Similarly, a reverse proxy acts as a mask for servers and hides the server’s identity from the client.

Databases

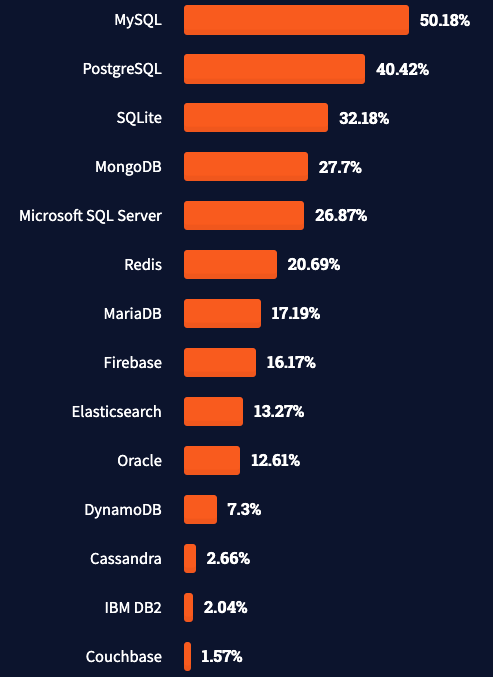

Most systems work with data that needs to be stored for later retrieval. One way to classify databases is based on their structure, which can be either relational or non-relational. Relational databases, such as MySQL and PostgreSQL, enforce strict relationships among data and have a highly structured organization. Non-relational databases, such as Cassandra and Redis, have flexible structures and can store both structured and unstructured data. They are often used in systems that are highly distributed and require high speed.

Database partitioning is a way of dividing a database into smaller chunks to improve the performance of the system. It is another critical concept used to improve latency and throughput so that more requests can be served. Here is a list of some popular databases based on the Stack Overflow Developer Survey 2021.

ACID vs BASE

Relational and non-relational databases offer different kinds of guarantees. Relational databases are associated with ACID compliance, while non-relational databases are associated with BASE compliance.

ACID: Atomicity, Consistency, Isolation, Durability

ACID compliance ensures that transactions on a relational database are executed in a controlled and reliable manner. A transaction is a single logical unit of work made up of one or more database operations.

- Atomicity: A group of operations is treated as a single unit of work. If any of these operations fails, the entire transaction fails and its changes are rolled back. This ensures that all or nothing is achieved!

- Consistency: State changes do not corrupt the data, and every transaction moves the database from one valid state to another.

- Isolation: Transactions are executed independently of each other, without affecting other transactions. This allows transactions to run concurrently and safely.

- Durability: Data written to the database is persisted and will remain there, even in the event of a failure.
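Atomicity and consistency can be seen with a small experiment using SQLite from Python's standard library. This is a sketch under assumptions: the `accounts` table, the account names, and the helper functions are hypothetical, and a `CHECK` constraint stands in for a business rule that balances may not go negative.

```python
import sqlite3


def open_bank() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical accounts."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts ("
        "name TEXT PRIMARY KEY, "
        "balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
    conn.commit()
    return conn


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> bool:
    """Move money between accounts; either both updates apply or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src))
        return True
    except sqlite3.IntegrityError:
        return False  # the CHECK constraint rejected a negative balance


def balances(conn: sqlite3.Connection) -> dict[str, int]:
    return dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))


bank = open_bank()
print(transfer(bank, "alice", "bob", 30), balances(bank))
print(transfer(bank, "alice", "bob", 500), balances(bank))
```

In the second transfer the credit to `bob` succeeds first, but the debit from `alice` violates the constraint, so the whole transaction is rolled back and neither balance changes.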

BASE: Basically Available, Soft State, Eventual Consistency

BASE describes the model followed by many NoSQL databases, which relax strict consistency in favour of availability. It is a key factor in the scalability of NoSQL databases.

- Basically Available: The system stays available for most requests, even when some nodes fail.

- Soft State: The state of the system may change over time, even without new input, as updates propagate between replicas.

- Eventual Consistency: Data may take some time to reach a consistent state, but if no new updates arrive, all replicas eventually become consistent.

SQL vs NoSQL

While designing any application, one needs to be clear about the type of storage the system requires. If the system is distributed in nature and scalability is essential, NoSQL databases are often a good choice. NoSQL databases are also preferred when the amount of data is huge.

SQL databases are favourable when a well-defined data structure is more important. They are preferred when queries are complex and the data requires fewer updates. However, there is always a trade-off when choosing between NoSQL and SQL databases. Sometimes, depending on business requirements, a polyglot architecture comprising both SQL and NoSQL databases is used to ensure application performance.

Scalability in distributed systems

As a service grows and the number of requests increases, the system can become slow and performance may suffer. Scaling addresses this issue by increasing the capacity of the system to handle more requests. There are two main ways to scale: horizontal scaling and vertical scaling.

Horizontal scaling: Adding more servers to distribute requests. This allows the system to handle more traffic by spreading it across multiple servers.

Vertical scaling: Increasing the capacity of a single machine by upgrading its resources like CPU, RAM, etc. This allows one machine to handle more traffic, up to the limits of the hardware available.
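For horizontal scaling, a rough back-of-the-envelope estimate of the number of servers is often enough to start a design discussion. The sketch below is only that: the `servers_needed` function, the traffic numbers, and the 30% headroom are all assumptions chosen for illustration.

```python
import math


def servers_needed(peak_rps: float, rps_per_server: float, headroom: float = 0.3) -> int:
    """Estimate server count, keeping a fraction of each server's capacity spare."""
    usable_rps = rps_per_server * (1 - headroom)
    return math.ceil(peak_rps / usable_rps)


# Hypothetical workload: 10,000 requests/second at peak, 500 requests/second per server.
print(servers_needed(peak_rps=10_000, rps_per_server=500))
```

Vertical scaling changes `rps_per_server`, while horizontal scaling changes the number of servers. In practice both are combined.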

Caching

Caching helps to improve the performance of a system by reducing its latency. It involves storing frequently used data in a cache so that it can be accessed quickly, instead of querying the database. However, implementing a cache also adds complexity because it is important to maintain consistency between data in the cache and data in the main database.

Cache memory is relatively expensive, so the size of a cache is limited. To make the best use of this space, cache eviction algorithms like LIFO, FIFO, LRU, and LFU are used to manage the cache. These algorithms determine which data should be removed from the cache when space is needed.
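As an example of an eviction policy, here is a minimal sketch of an LRU (least recently used) cache built on Python's `collections.OrderedDict`. The class name and interface are hypothetical, and a missing key is reported as `None` for simplicity.

```python
from collections import OrderedDict
from typing import Any, Hashable


class LRUCache:
    """Fixed-size cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items: OrderedDict[Hashable, Any] = OrderedDict()

    def get(self, key: Hashable) -> Any:
        """Return the cached value, or None on a cache miss."""
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        """Insert or update a value, evicting the oldest entry if full."""
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used entry


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # "a" is now the most recently used key
cache.put("c", 3)   # cache is full, so "b" is evicted
print(cache.get("b"), cache.get("a"), cache.get("c"))
```

LRU works well when recently accessed data is likely to be accessed again soon. Other policies such as LFU make a different bet about access patterns.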

Consistent Hashing

Consistent hashing is a widely used concept in distributed systems because it offers flexibility in scaling. It is an improvement over traditional hashing (for example, hashing a key modulo the number of servers), which is ineffective when servers are added or removed because most keys then map to a different server.

In consistent hashing, users and servers are placed on a virtual circular structure called a hash ring. The ring is considered infinite and can accommodate any number of servers. Each server is assigned a position on the ring by a hash function, and a request is typically served by the first server found when moving clockwise from the request's own position. This concept allows for efficient distribution of requests or data among servers. It helps us achieve horizontal scaling, which increases throughput and reduces latency.
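The hash ring can be sketched in a few lines of Python. This is one possible implementation, not a reference one: the `HashRing` class is hypothetical, SHA-256 is an arbitrary choice of hash function, and the use of several "virtual nodes" per server (a common refinement not discussed above) is an added assumption to spread keys more evenly.

```python
import bisect
import hashlib


def ring_position(value: str) -> int:
    """Map a string to a position on the ring using SHA-256."""
    return int(hashlib.sha256(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    def __init__(self, servers: list[str], virtual_nodes: int = 100) -> None:
        self._virtual_nodes = virtual_nodes
        self._positions: list[int] = []   # sorted positions on the ring
        self._owner: dict[int, str] = {}  # position -> server name
        for server in servers:
            self.add_server(server)

    def add_server(self, server: str) -> None:
        """Place the server on the ring at several hashed positions."""
        for i in range(self._virtual_nodes):
            position = ring_position(f"{server}#{i}")
            self._owner[position] = server
            bisect.insort(self._positions, position)

    def remove_server(self, server: str) -> None:
        """Remove every position that belongs to the server."""
        self._positions = [p for p in self._positions if self._owner[p] != server]
        self._owner = {p: s for p, s in self._owner.items() if s != server}

    def get_server(self, key: str) -> str:
        """Return the first server clockwise from the key's position."""
        if not self._positions:
            raise LookupError("the ring has no servers")
        index = bisect.bisect_right(self._positions, ring_position(key))
        return self._owner[self._positions[index % len(self._positions)]]


ring = HashRing(["server-a", "server-b", "server-c"])
keys = [f"user-{i}" for i in range(1000)]
before = {key: ring.get_server(key) for key in keys}
ring.add_server("server-d")
moved = [key for key in keys if ring.get_server(key) != before[key]]
print(f"{len(moved)} of {len(keys)} keys moved after adding a server")
```

When a server is added, the only keys that move are the ones the new server takes over. Every other key stays on its original server, which is what makes scaling out cheap compared with modulo-based hashing.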

CAP Theorem

CAP theorem is an essential concept for designing networked shared-data systems. It states that a distributed database system can provide only two of these three properties at the same time: consistency, availability, and partition tolerance. We can make trade-offs among the three properties based on the use cases of our system.

- Consistency: Consistency means that all nodes stay synchronised in a well-coordinated manner, so that any read operation returns the result of the most recent write operation.

- Availability: Availability means that the system responds whenever a request is made to it. In other words, whenever a client sends a request to the server, the system should remain available and respond, irrespective of the failure of one or more nodes.

- Partition Tolerance: Partition tolerance means that the system should continue to work irrespective of node failures or network failures. Since network partitions cannot be ruled out in any distributed system, partition tolerance is necessary, so in practice we need to choose between availability and consistency when a partition occurs.
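The choice between consistency and availability during a partition can be illustrated with a toy replica that behaves differently depending on its mode. The `Replica` class and the "CP"/"AP" mode labels are a simplification made up for this sketch; real systems expose this trade-off through far more detailed settings.

```python
class Replica:
    """One replica that may be cut off from its peers by a network partition."""

    def __init__(self, mode: str, value: str) -> None:
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.value = value         # last value this replica has seen
        self.partitioned = False   # True while peers are unreachable

    def read(self) -> str:
        if self.partitioned and self.mode == "CP":
            # Consistency first: refuse rather than risk a stale answer.
            raise RuntimeError("unavailable during partition")
        # Availability first (or no partition): answer with the local value,
        # which may be stale if a peer has accepted a newer write.
        return self.value


cp_replica = Replica("CP", "v1")
ap_replica = Replica("AP", "v1")
cp_replica.partitioned = True
ap_replica.partitioned = True

print(ap_replica.read())  # responds, possibly with stale data
try:
    cp_replica.read()
except RuntimeError as error:
    print(error)          # refuses to answer until the partition heals
```

A banking ledger would usually prefer the "CP" behaviour, while a social media feed can often tolerate the "AP" behaviour and show slightly stale data.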

Some other important concepts to explore

- Server-Sent Events

- Long Polling

- Client Server Architecture

- Web Sockets

- Load Balancing Algorithms

- Peer to Peer Networks

- Master Slave Replication

- Throttling and Rate Limiting

- How to Choose the Right Database?

- Leader Election in Distributed Systems

Conclusion

System design is an essential skill to have and is equally important from the interview point of view. One needs to be well aware of all the trade-offs while designing any system. In this blog, we tried to cover some basic concepts necessary for getting started.

We are looking forward to your feedback in the message below. Enjoy learning, Enjoy system design!

©2023 Code Algorithms Pvt. Ltd.

All rights reserved.