YouTube System Design

What is YouTube?

YouTube is one of the most popular video-streaming platforms. It allows users to upload, watch, search, and share video content, and to like, dislike, and comment on videos. So YouTube is a huge system! Have you ever thought about how it works and which principles lie behind its design? Let’s look at this in detail.

Key Requirements of the System

In this blog, we will focus on designing a small version of YouTube with the following features.

Functional Requirements

- Users should be able to upload videos.

- Users should be able to view videos.

- Users should be able to change video quality.

- The system should keep the count of likes, dislikes, comments, and views.

Non-Functional Requirements

- Video uploading should be fast and users should have a smooth streaming experience.

- The system should be highly available, scalable, and reliable. We can trade some consistency for high availability, i.e., it is fine if a user briefly sees slightly stale video data.

- The system should offer low latency and high throughput.

- The system should be cost-efficient.

Capacity Estimation

- Suppose total users = 2 billion.

- Suppose daily active users = 400 million.

- Suppose the number of videos watched/day/user = 5.

- Total video views/day = 400 million * 5 = 2 billion views/day.

- YouTube is a view-heavy (read-heavy) system. Suppose the view-to-upload ratio (read-to-write ratio) is 100:1. Then total video uploads/day = 2 billion / 100 = 20 million uploads/day.

- Suppose the average video size is 100 MB. Total storage needed/day = 20 million * 100 MB = 2000 TB/day = 2 PB/day. Here we ignore video compression and replication, which would change our estimates.

- If we use an existing cloud CDN to serve videos, we pay for data transfer. Suppose we use Amazon’s CDN, CloudFront, and assume it costs $0.01 per GB of data transfer. Then the total cost of video streaming/day = total video views/day * average video size in GB * $0.01 = 2 billion * 0.1 * 0.01 = $2 million/day. Serving every video from the CDN would clearly be very expensive.
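
The estimates above can be reproduced with a few lines of arithmetic. This is a minimal sketch that only restates the assumptions from the list (one upload per 100 views, 100 MB per video, an assumed $0.01 per GB of CDN transfer). The function and constant names are illustrative, and decimal units (1 PB = 10^9 MB) are assumed.

```python
# Back-of-the-envelope capacity estimate using the assumptions listed above.
DAILY_ACTIVE_USERS = 400_000_000
VIEWS_PER_USER_PER_DAY = 5
VIEWS_PER_UPLOAD = 100        # one upload for every 100 views
AVG_VIDEO_SIZE_MB = 100
CDN_COST_PER_GB_USD = 0.01    # assumed price from the text, not a quoted rate


def estimate():
    views_per_day = DAILY_ACTIVE_USERS * VIEWS_PER_USER_PER_DAY
    uploads_per_day = views_per_day // VIEWS_PER_UPLOAD
    # Decimal units: 1 PB = 1,000,000,000 MB and 1 GB = 1,000 MB.
    storage_pb_per_day = uploads_per_day * AVG_VIDEO_SIZE_MB / 1_000_000_000
    egress_gb_per_day = views_per_day * AVG_VIDEO_SIZE_MB / 1_000
    cdn_cost_usd_per_day = egress_gb_per_day * CDN_COST_PER_GB_USD
    return {
        "views_per_day": views_per_day,
        "uploads_per_day": uploads_per_day,
        "storage_pb_per_day": storage_pb_per_day,
        "cdn_cost_usd_per_day": cdn_cost_usd_per_day,
    }


if __name__ == "__main__":
    for name, value in estimate().items():
        print(f"{name}: {value:,.2f}")
```

Changing a single constant (for example, the average video size) shows how sensitive the storage and CDN cost figures are to each assumption.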

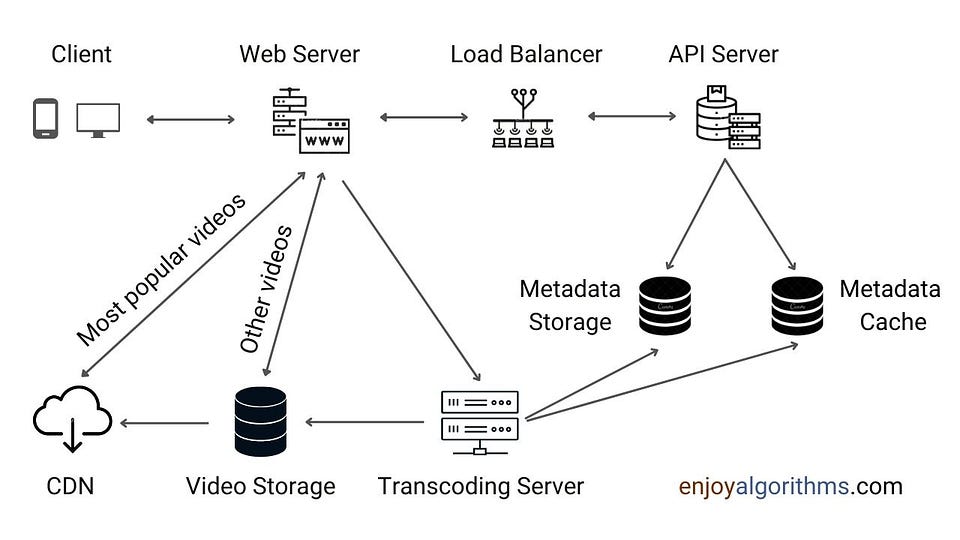

High-Level Design

Client: A computer, mobile phone, etc.

Video Storage: A BLOB storage system for storing transcoded videos. Binary Large Object (BLOB) is a collection of binary data stored as a single entity in a database management system.

Transcoding Server: We use this for video transcoding or encoding purposes, which converts a video into multiple formats and resolutions (144p, 240p, 360p, 480p, 720p, 1080p & 4K) to provide the best video streaming for different devices and bandwidth requirements.

API Server: We use this to handle all requests except video streaming. This includes feed recommendations, generating the video upload URL, updating the metadata database and cache, user signup, etc. This server talks to the various databases to get the data it needs.

Web Server: Used for handling incoming requests from the client. Based on the type of request, it routes the request to the API server or transcoding server.

CDN: Encoded videos are also stored in the CDN for fast streaming. When a user plays a popular video, it is streamed from the CDN.

Load Balancer: We use this to evenly distribute requests among API servers.

Metadata Storage: We use this to store all the video metadata like title, video URL, thumbnails, user information, view count, likes, comments, size, resolution, format, etc. It is sharded and replicated to meet performance and high availability requirements.

Metadata Cache: We use this to cache metadata, user info, and thumbnails for better read performance.

As YouTube is a heavily loaded service, it exposes several APIs so that each operation can be handled smoothly. We can design APIs for features like video uploading, video streaming, video search, adding comments, recommendations, etc.

Video Uploading Process

We use the uploadVideo API for uploading video content. It returns an HTTP response that indicates whether the video was uploaded successfully.

string uploadVideo(string apiKey, stream videoData, string videoTitle, string videoDescription, string videoCategory, string videoTags[], string videoLanguage, string videoLocation)

- apiKey: An identification of the registered account.

- videoData: Uploaded video data.

- videoTitle: The title of the video.

- videoDescription: The description text of the video.

- videoCategory: Video category data like sports, education, etc.

- videoTags[]: A list of tags for the video.

- videoLanguage: The language of the content like English, Hindi, etc.

- videoLocation: The location where the video was recorded.

The video upload flow is divided into two processes running in parallel: 1) Uploading the video content and 2) Updating the video metadata.

1) Uploading the video content

First, the user uploads the video, and then the transcoding servers start the video encoding process. The encoding process converts the video into the multiple formats and resolutions that the platform supports. To increase throughput, we can parallelize this work by spreading it across several machines. If a video turns out to be popular, the system can run another level of compression to deliver the same visual quality at a much smaller size. Overall, the video is processed by a batch job that runs several automated steps like generating thumbnails, metadata, and video transcripts, encoding, etc.

Video encoding is possible in two ways: lossless and lossy. In lossless encoding, no data is lost when converting from the original format to the new format. In lossy encoding, some data is dropped from the original video to reduce the size of the new format. We might have experienced this when uploading a high-resolution image to a social network: after the upload, the image doesn’t look as good as the original.

After the encoding process completes, two things happen in parallel: 1) the encoded videos are stored in the video storage and the CDN, and 2) the metadata database and cache are updated. Finally, the API servers inform the client that the video has been uploaded successfully and is ready for streaming.
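
The idea of spreading encoding work across workers can be sketched as follows. This is only an illustration: the `transcode` function is a hypothetical placeholder that returns an output name instead of invoking a real encoder, and a local thread pool stands in for the fleet of transcoding machines.

```python
from concurrent.futures import ThreadPoolExecutor

# Target resolutions mentioned in the high-level design.
RESOLUTIONS = ["144p", "240p", "360p", "480p", "720p", "1080p", "4K"]


def transcode(video_id, resolution):
    """Hypothetical placeholder: a real worker would run an encoder here."""
    return f"{video_id}_{resolution}.mp4"


def transcode_all(video_id, resolutions=RESOLUTIONS, workers=4):
    """Encode every target resolution in parallel and collect the outputs."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so outputs line up with resolutions.
        outputs = pool.map(lambda res: transcode(video_id, res), resolutions)
        return dict(zip(resolutions, outputs))


if __name__ == "__main__":
    print(transcode_all("video123"))
```

In a production pipeline each resolution (or each chunk of the video) would more likely be a message on a job queue consumed by separate machines, but the fan-out and collect pattern is the same.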

2) Updating the video metadata

While content is being uploaded to the video storage, the client, in parallel, sends a request to update the video metadata. This data includes title, video URL, thumbnails, user information, resolution, etc.
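
A minimal sketch of the two parallel steps is shown below. The in-memory dictionaries stand in for the video storage and the metadata database, and all function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

# In-memory stand-ins for the video storage and the metadata database.
video_storage = {}
metadata_db = {}


def upload_content(video_id, video_data):
    """Store the raw bytes and report how many were written."""
    video_storage[video_id] = video_data
    return len(video_data)


def update_metadata(video_id, title, description):
    """Record the descriptive fields for the video."""
    metadata_db[video_id] = {"title": title, "description": description}


def upload_video(video_id, video_data, title, description):
    """Run the content upload and the metadata update concurrently."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        content = pool.submit(upload_content, video_id, video_data)
        metadata = pool.submit(update_metadata, video_id, title, description)
        stored_bytes = content.result()  # re-raises if the upload failed
        metadata.result()                # re-raises if the update failed
    return {"videoId": video_id, "storedBytes": stored_bytes, "status": "uploaded"}


if __name__ == "__main__":
    print(upload_video("video123", b"\x00" * 1024, "Demo", "A test upload"))
```

Because both futures are awaited before returning, the caller is told the upload succeeded only when the content and the metadata are both in place.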

Video Streaming Process

We use the viewVideo API for streaming video content. It continuously sends small pieces of the video media stream, starting from the given offset.

stream viewVideo(string apiKey, string videoId, int videoOffset, string codec, string videoResolution)

- apiKey: An identification of the registered account.

- videoId: An identifier for the video.

- videoOffset: This is the time from the start of the video, which enables users to watch a video on any device from the same point where they left off.

- codec: A codec is the video compression standard used to compress large video content into smaller sizes. We send the client’s codec info in the API call to support play/pause across multiple devices, which may support different codecs.

- videoResolution: We also send the client’s resolution details because different devices may have different resolutions.
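
To illustrate how videoOffset lets playback resume from where the user left off, here is a minimal sketch. It assumes the video is already split into fixed-duration segments; the segment length of 4 seconds and the function name are assumptions made for the example, not part of the API above.

```python
def view_video(segments, video_offset_s, segment_duration_s=4):
    """Yield (index, segment) pairs starting at the segment containing the offset.

    `segments` is an ordered list of already-encoded pieces of one video.
    """
    if video_offset_s < 0:
        raise ValueError("video_offset_s must be non-negative")
    start = video_offset_s // segment_duration_s
    for index in range(start, len(segments)):
        yield index, segments[index]


if __name__ == "__main__":
    pieces = ["seg0", "seg1", "seg2", "seg3"]
    # Resuming at 9 seconds starts from the segment covering seconds 8 to 12.
    for index, piece in view_video(pieces, video_offset_s=9):
        print(index, piece)
```

The client keeps pulling from the generator as playback proceeds, which mirrors the "small pieces from the given offset" behavior described for the streaming API.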

Whenever a user sends a request to watch a video, the platform checks the viewer’s device type, screen size, processing capability, and network bandwidth. Based on that, it delivers the best video version from the nearest edge server in real time. The client device then loads a small amount of data at a time and continuously receives video streams from the CDN or the video storage. Here are two critical observations:

- CDN ensures fast video delivery on a global scale.

- There will be two types of video in our system: 1) Popular videos that are accessed frequently 2) Unpopular videos which have few viewers.

Based on the above observations, we can make cost-effective decisions and implement a few optimizations. For example, we can stream only the most popular videos from the CDN and serve the other videos from the high-capacity video storage. If a video becomes popular later, it moves from the video storage to the CDN.
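
One simple way to express this policy is a view counter with a promotion threshold. The sketch below is an assumption-laden illustration: the class name, the threshold value, and the use of total views as the popularity signal are all hypothetical.

```python
class VideoRouter:
    """Serve popular videos from the CDN and everything else from video storage."""

    def __init__(self, threshold=1000):
        self.threshold = threshold  # hypothetical popularity cut-off
        self.views = {}
        self.in_cdn = set()

    def record_view(self, video_id):
        """Count a view and promote the video once it crosses the threshold."""
        self.views[video_id] = self.views.get(video_id, 0) + 1
        if self.views[video_id] >= self.threshold:
            self.in_cdn.add(video_id)

    def source_for(self, video_id):
        return "cdn" if video_id in self.in_cdn else "video_storage"


if __name__ == "__main__":
    router = VideoRouter(threshold=3)
    for _ in range(3):
        router.record_view("cat-video")
    print(router.source_for("cat-video"))   # promoted after three views
    print(router.source_for("rare-video"))  # still served from storage
```

A real system would likely use a time-windowed signal (views per hour or day) and also demote videos whose popularity fades, so that CDN spend tracks current demand.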

We need to use a standard streaming protocol to control data transfer during video streaming. Some popular streaming protocols are MPEG-DASH, Apple HLS, Microsoft Smooth Streaming, Adobe HTTP Dynamic Streaming (HDS), etc. Different streaming protocols support different video encodings and playback players, so we need to choose the protocol carefully. Suppose we use the Dynamic Adaptive Streaming over HTTP (MPEG-DASH) protocol for video streaming, which can help us in two ways:

- Make content available to the users at different bit rates and reduce the buffering as much as possible.

- Deliver quality content based on network bandwidth and device type of the end-user.
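
The core of adaptive bitrate streaming is the client picking, for each segment, the best rendition its current bandwidth can sustain. The sketch below shows that selection step only. The bitrate ladder and the 0.8 safety factor are illustrative assumptions, not values taken from the MPEG-DASH specification.

```python
# Illustrative bitrate ladder in kbit/s; real ladders vary per service.
RENDITIONS_KBPS = {
    "144p": 100,
    "240p": 300,
    "360p": 700,
    "480p": 1200,
    "720p": 2500,
    "1080p": 5000,
}


def pick_rendition(bandwidth_kbps, renditions=RENDITIONS_KBPS, safety=0.8):
    """Return the highest-bitrate rendition that fits within the bandwidth budget."""
    budget = bandwidth_kbps * safety  # leave headroom to avoid rebuffering
    fitting = [(rate, name) for name, rate in renditions.items() if rate <= budget]
    if not fitting:
        # Nothing fits: fall back to the lowest-bitrate rendition.
        return min(renditions, key=renditions.get)
    return max(fitting)[1]


if __name__ == "__main__":
    for measured in (50, 3000, 10000):
        print(measured, "kbit/s ->", pick_rendition(measured))
```

The player would re-run this choice as its bandwidth estimate changes, switching renditions at segment boundaries.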

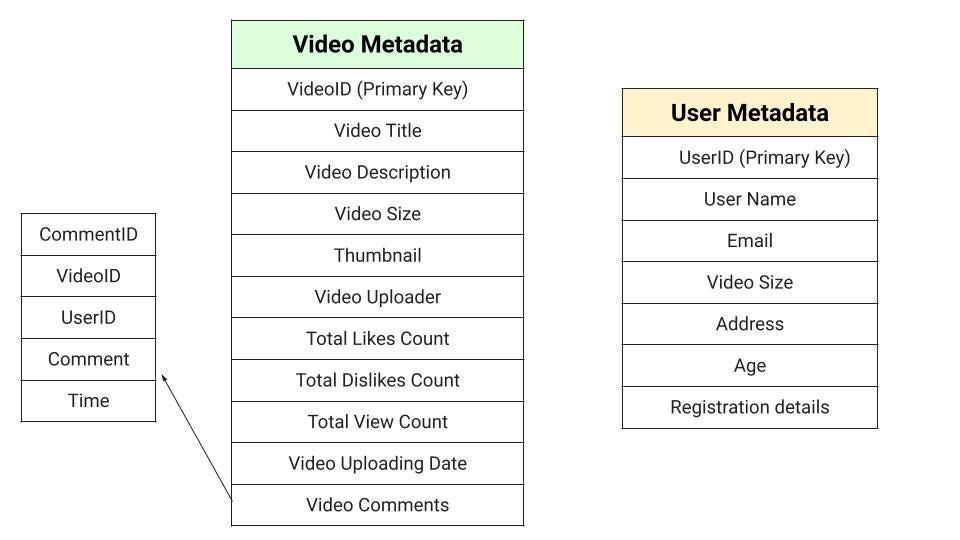

Metadata Schema

We can use MySQL to store metadata like user and video information. For this, we can maintain two separate tables: one table to store user metadata and another table to store video metadata.
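
A possible shape for the two tables is sketched below. The column names are assumptions chosen for illustration, and the example uses Python's built-in sqlite3 module as a stand-in so that it runs without a MySQL server; the column types would need adjusting for MySQL.

```python
import sqlite3

# Hypothetical columns; the article only states that there are two tables.
SCHEMA = """
CREATE TABLE users (
    user_id    INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    email      TEXT NOT NULL UNIQUE
);
CREATE TABLE videos (
    video_id    INTEGER PRIMARY KEY,
    uploader_id INTEGER NOT NULL REFERENCES users(user_id),
    title       TEXT NOT NULL,
    video_url   TEXT NOT NULL,
    view_count  INTEGER NOT NULL DEFAULT 0,
    like_count  INTEGER NOT NULL DEFAULT 0
);
"""


def create_metadata_db():
    """Create an in-memory database holding the user and video tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


if __name__ == "__main__":
    conn = create_metadata_db()
    conn.execute("INSERT INTO users (user_id, name, email) VALUES (1, 'Ada', 'ada@example.com')")
    conn.execute(
        "INSERT INTO videos (video_id, uploader_id, title, video_url) "
        "VALUES (10, 1, 'Intro', 'https://example.com/v/10')"
    )
    row = conn.execute(
        "SELECT v.title, u.name, v.view_count FROM videos v "
        "JOIN users u ON u.user_id = v.uploader_id"
    ).fetchone()
    print(row)
```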

Metadata Replication: Master-Slave Architecture

In this architecture, we need two kinds of data sources to scale out the application: one to handle write queries and the other to handle read queries. Write requests go to the master first and are then applied to all the slaves. Read requests are routed to the slave replicas in parallel to reduce the load on the master. This helps us increase the read throughput.

Such a design may serve stale data from the read replicas. How? Suppose we perform a write by adding a new video. Its metadata is first updated in the master. If a read request arrives before this new data has been propagated to the slaves, the slaves cannot see it yet and return stale data. This inconsistency may also cause the view count of a video to differ between the master and a replica. A slight inconsistency in the view count for a short duration is acceptable.

Since a huge number of new videos are uploaded every day and the read volume is extremely high, the master-slave architecture will suffer from replication lag. In addition, update operations cause cache misses, which go to disk. Now the critical question is: how can we further improve the performance of read and write operations?
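
The stale-read scenario can be reproduced with a toy model. In this sketch, writes go to the master, reads are spread round-robin over replicas, and replication is an explicit step so that the lag is visible. The class and method names are hypothetical.

```python
class ReplicatedStore:
    """Toy master/replica store with explicit (delayed) replication."""

    def __init__(self, replica_count=2):
        self.master = {}
        self.replicas = [{} for _ in range(replica_count)]
        self._pending = []   # writes not yet applied to the replicas
        self._next_read = 0  # round-robin cursor for read routing

    def write(self, key, value):
        """Writes always go to the master first."""
        self.master[key] = value
        self._pending.append((key, value))

    def read(self, key):
        """Reads are served by replicas, so they may lag behind the master."""
        replica = self.replicas[self._next_read % len(self.replicas)]
        self._next_read += 1
        return replica.get(key)

    def replicate(self):
        """Apply all pending writes to every replica, in write order."""
        for key, value in self._pending:
            for replica in self.replicas:
                replica[key] = value
        self._pending.clear()


if __name__ == "__main__":
    store = ReplicatedStore()
    store.write("video:1:title", "My first video")
    print(store.read("video:1:title"))  # replica has not caught up yet
    store.replicate()
    print(store.read("video:1:title"))  # now visible on the replicas
```

Reads that must see the latest write (for example, an uploader viewing their own new video) can be routed to the master instead, at the cost of extra load on it.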

Metadata Sharding

Sharding is another way of scaling a relational database besides replication. In this process, we distribute our data across multiple machines so that we can perform read/write operations efficiently. The idea is that, instead of a single master handling all write requests, we distribute write requests across several shards to increase write performance. We can also create separate replicas of each shard to improve redundancy.

Sharding increases system complexity, so we need an abstraction layer to handle these scalability challenges. This leads to the need for Vitess!
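
A common way to route a record to a shard is to hash its key. The sketch below assumes hash-based sharding on the video ID, which is one option among several (range-based and directory-based sharding are others). The names are hypothetical, and plain dictionaries stand in for the shard databases.

```python
import hashlib


def shard_for(video_id, shard_count):
    """Map a video ID to a shard index with a stable hash."""
    digest = hashlib.md5(video_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class ShardedMetadataStore:
    """Spread metadata writes and reads over several independent shards."""

    def __init__(self, shard_count=4):
        self.shards = [{} for _ in range(shard_count)]

    def put(self, video_id, metadata):
        self.shards[shard_for(video_id, len(self.shards))][video_id] = metadata

    def get(self, video_id):
        return self.shards[shard_for(video_id, len(self.shards))].get(video_id)


if __name__ == "__main__":
    store = ShardedMetadataStore(shard_count=4)
    store.put("video123", {"title": "Intro"})
    print(shard_for("video123", 4), store.get("video123"))
```

Note that with plain modulo hashing, changing the shard count remaps most keys, which is one reason resharding is hard to do by hand.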

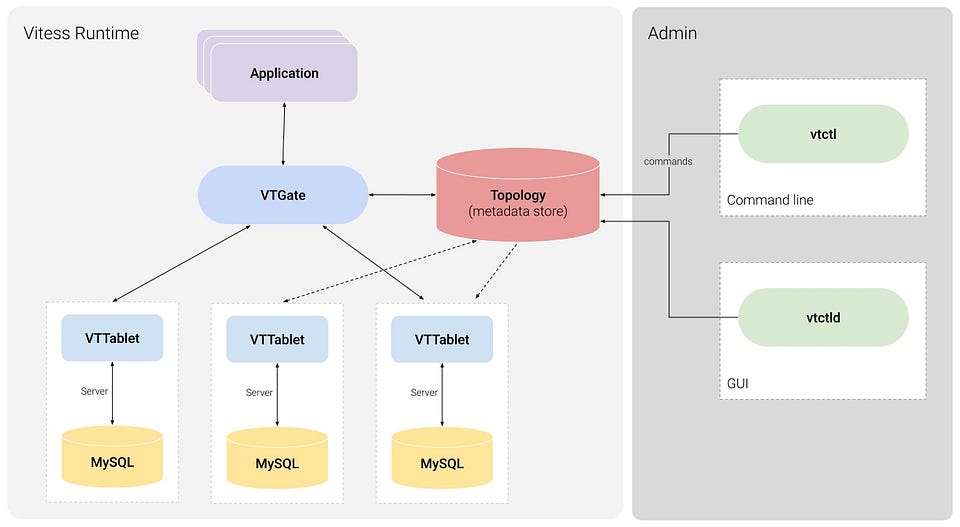

Vitess: A database clustering system for horizontal scaling of MySQL

Vitess is a database clustering system that runs on top of MySQL. It has several built-in features that allow us to scale horizontally, much like a NoSQL database.

Vitess Architecture. Source: https://vitess.io/

Here are some important features of Vitess:

Scalability: Its built-in sharding features let us grow the database without adding sharding logic to the application.

Performance: It automatically rewrites bad queries to improve database performance. It also uses caching mechanisms and prevents duplicate queries.

Manageability: It improves manageability by automatically handling failovers and backups.

Sharding management: MySQL doesn’t natively support sharding, but we will need it as the database grows. Vitess enables live resharding with minimal read-only downtime.

Caching

To build such a scalable system, we need to use different caching strategies. We can use a distributed cache like Redis or Memcached to store the metadata. To keep the cache efficient, we can use the LRU (Least Recently Used) eviction policy. We can also use a CDN as a video content cache; the CDN can fetch media content directly from AWS S3. If the service runs on AWS, it is convenient to use CloudFront as the content cache and ElastiCache as the metadata cache.
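
The LRU policy mentioned above can be sketched in a few lines with an ordered dictionary. This is a single-process illustration of the eviction rule only, not how Redis or Memcached implement it internally; the class name is hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()  # oldest entry first, newest last

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used


if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    cache.put("video:1", {"title": "A"})
    cache.put("video:2", {"title": "B"})
    cache.get("video:1")                  # video:1 is now the most recent
    cache.put("video:3", {"title": "C"})  # evicts video:2
    print(cache.get("video:2"), cache.get("video:1"))
```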

Disaster Management Strategy

We need to avoid data loss or service unavailability due to power outages, machine failures, natural disasters like earthquakes, etc. For this, we can back up data in data centers located in different geographical regions. The nearest data center serves each user request, which also helps fetch data faster and reduces latency.
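
Routing to the nearest data center, with a fallback when one is unavailable, can be sketched as follows. The data center names and coordinates are made up for illustration, and great-circle distance is used as a rough proxy for network proximity.

```python
import math

# Hypothetical data centers with approximate (latitude, longitude) in degrees.
DATA_CENTERS = {
    "ap-south": (19.08, 72.88),
    "eu-central": (50.11, 8.68),
    "us-east": (39.04, -77.49),
}


def haversine_km(a, b):
    """Great-circle distance in kilometers between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def pick_data_center(user_location, healthy, centers=DATA_CENTERS):
    """Return the closest data center that is currently healthy."""
    candidates = [name for name in centers if name in healthy]
    if not candidates:
        raise RuntimeError("no healthy data center available")
    return min(candidates, key=lambda name: haversine_km(user_location, centers[name]))


if __name__ == "__main__":
    user = (28.61, 77.21)
    print(pick_data_center(user, healthy={"ap-south", "eu-central", "us-east"}))
    # If the nearest site is down, the request falls back to the next closest.
    print(pick_data_center(user, healthy={"eu-central", "us-east"}))
```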

Conclusion

YouTube is a very complex and highly scalable service to design. In this blog, we have covered only the fundamental concepts necessary for building such video-sharing services. We have limited our discussion to the system’s generic and critical requirements, but it could be expanded further by including other functionalities like personalized recommendations.

Enjoy learning, Enjoy system design!