PUBG Cheater Detection: Machine Learning Project

Introduction

The gaming industry is growing tremendously, and many tech giants are investing heavily in this domain. Google is developing a game development kit and cloud platforms to build and play games online. Similarly, Facebook and Microsoft are investing heavily in VR games. This gives a sense of how big the gaming industry is. Recent user engagement also shows that a large number of people love competitive games.

But this engagement highly depends upon the fundamental question: “How fair is it for all the players?” If a competitive game is biased, genuine players will get disappointed and avoid playing. Cheaters try to modify game controls to win competitive matches, which is unfair to many. Hence companies take many measures to make the game as fair as possible. One such step is to detect the cheaters or hackers and eliminate them from the competition.

Like all other industries, the gaming industry collects players’ data to analyze their performance. Let’s try to understand this with the example of PUBG (PlayerUnknown’s Battlegrounds), one of the most famous and most played online games. On average, PUBG has 30 million daily active users. It collects data from players and categorizes them into different segments. But it is impossible to categorize 30 million players manually. That’s where machine learning comes into the picture. ML allows us to analyze tons of data, draw meaningful results, and place cheaters in a separate category.

Key Takeaways from this article

- How does PUBG use Machine Learning to build a hack-detection system?

- Five steps to implement a cheater detection model using the Random Forest algorithm.

- How to evaluate the performance using various evaluation metrics?

- Other use-cases of ML in the gaming industry.

- Possible interview questions on this project.

Let’s start without any further delay.

PUBG's Hack Detection System

In October 2021, PUBG permanently banned 2.5 million accounts from Battlegrounds Mobile India (BGMI) and temporarily banned 706,319 accounts. According to a report from Tencent (the publisher of PUBG Mobile), 46 percent of the banned accounts were caught using auto-aim and x-ray vision hacks, 18 percent were using speed hacks, 20 percent were modifying their area damage and character model, and 16 percent were banned for other reasons.

What is PUBG doing about cheaters?

Machine learning is the answer. The security team of PUBG constantly monitors tons of data, with over 10 million reports on average daily. They identify and remove hackers by scanning for suspicious software and modified game data. They also look for impossible events happening during a match, for example, someone taking a shot from a considerable distance and still managing to hit and damage the enemy, or players killing enemies without moving a single step.

PUBG also provides an option to report players in a match. You click on the report button, and a new window pops up where you can select the type of misbehavior or write a message manually. The team reviews your report and notifies you whether the player has been banned. This is not all: PUBG uses many more techniques to provide fair gameplay to its users. After this section, we will start building our model by defining our problem statement and classifying our machine-learning model on the five bases we have discussed in this blog.

Defining the Problem Statement

Our problem statement is that we want to detect whether a PUBG player is genuine or a cheater. We have labeled data, so we will use a supervised learning approach. This is a binary classification problem, as we have two classes: cheater and non-cheater. We will solve this problem using a classical machine learning algorithm, which we will discuss later in this blog. It will be a non-parametric model with non-probabilistic outputs because we are not dealing with probability distributions. These last two classifications depend on the algorithm we choose to solve this problem. Now let’s proceed to implement the PUBG cheater detection model.

Steps to implement the cheater detector for PUBG

Step 1: Data Collection

There are two famous PUBG datasets available on Kaggle:

We will use the PUBG placement prediction dataset to build our model. We need to download the train_V2.csv file in zip format, unzip it, and read it using pandas.read_csv().
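As a minimal sketch of this loading step, the snippet below reads the file into a DataFrame. The helper name `load_pubg_data` and the local path in the usage comment are assumptions for illustration, not part of the original project.

```python
import pandas as pd


def load_pubg_data(path):
    """Read the PUBG training CSV into a DataFrame.

    `path` is an assumed local path to the downloaded file. pandas can also
    read a .zip archive directly when it contains a single CSV file.
    """
    return pd.read_csv(path)


# Hypothetical usage, assuming the file sits next to the notebook:
# pubg_data = load_pubg_data("train_V2.csv")
# print(pubg_data.shape)
```

Keeping the load in one small function makes it easy to point the rest of the code at a smaller sample file while experimenting.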

This dataset has the shape (4446966, 29), which means that 29 different attributes are recorded for 4,446,966 player entries across different matches. Let’s first understand these 29 features in detail.

Feature description

- DBNOs: Number of enemy players knocked.

- assists: Number of enemy players this player damaged that were then killed by teammates.

- boosts: Number of boost items used in a match.

- damageDealt: Total damage dealt during a game.

- headshotKills: Number of headshot kills.

- heals: Number of healing items used.

- Id: Player’s Id.

- killPlace: Ranking based on the number of enemy players killed.

- killPoints: Points a player gets by killing an enemy.

- killStreaks: Number of continuous kills in a short amount of time.

- kills: Number of enemy players killed.

- longestKill: Longest distance between player and enemy player killed at the time of death.

- matchDuration: Duration of the match in seconds.

- matchId: ID to identify a game.

- matchType: Type of match, such as “solo”, “duo”, “squad”, “solo-fpp”, “duo-fpp”, and “squad-fpp”.

- rankPoints: Elo-like ranking of player.

- revives: Number of times this player revived teammates.

- rideDistance: Total distance traveled in vehicles measured in meters.

- roadKills: Number of kills while the player is in a vehicle.

- swimDistance: Total distance traveled by swimming measured in meters.

- teamKills: Number of times a player killed his teammate.

- vehicleDestroys: Number of vehicles destroyed.

- walkDistance: Total distance traveled on foot measured in meters.

- weaponsAcquired: Number of weapons picked up.

- winPoints: Win-based external ranking of player.

- groupId: ID to identify a team or group in a match. This will keep changing for the same team or group in the next game.

- numGroups: Number of groups in a match.

- maxPlace: Worst placement we have data for in the game. This may not match with numGroups, as sometimes the data skips over placements.

- winPlacePerc: 1 corresponds to 1st place, and 0 corresponds to last place in the match.

Once we get insight into all these features, it is essential to understand how they help us identify the cheaters.

Step 2: Separating potential cheaters

Using the features described above, we need to list some impossible events and spot the players of these events. After categorizing these impossible events, we will make a separate cheaters_data to contain information about the potential cheaters from our actual data and remove them from the existing dataset.

Hunt for Impossible Events

Killing an enemy without taking a single step

If you have played any Battle Royale game, you know that killing an enemy without taking a single step is nearly impossible. A player having ‘kills’ > 0 with ‘totalDistance’ = 0 can be considered an impossible event, and players involved in this event are potential cheaters.

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# pubg_data is the DataFrame read from the CSV file in Step 1

pubg_data['totalDistance'] = pubg_data['rideDistance'] + pubg_data['walkDistance'] + pubg_data['swimDistance']

pubg_data['potential cheaters'] = (pubg_data['kills'] > 0) & (pubg_data['totalDistance'] == 0)

cheaters_data = pubg_data[pubg_data['potential cheaters']==True]

pubg_data.drop(pubg_data[pubg_data['potential cheaters']==True].index, inplace=True)

Kill Distribution

Most players fall in the 0–15 kills range. It is very unlikely that someone will break the world record of 59 kills in a match, and if someone does, it is better to put them in the potential cheaters category.

plt.figure(figsize=(12,4))

sns.countplot(data=pubg_data, x='kills').set_title('Kills')

plt.show()

We might be thinking: What if someone breaks the world record? Will PUBG consider them a cheater? Let’s relate this to real life: we don’t judge people on just one action, right? We consider their past actions and behavior. Similarly, before banning an account, PUBG also considers the player’s past actions, report history, in-game behavior, and more.

pubg_data['potential cheaters'] = pubg_data['kills'] > 59

cheaters_data = pd.concat([cheaters_data, pubg_data[pubg_data['potential cheaters']==True]])

pubg_data.drop(pubg_data[pubg_data['potential cheaters']==True].index, inplace=True)

Longest Kill

Killing an enemy from more than 1000 m away is very unlikely unless you are an exceptionally skilled player, or you knock an enemy down and then drive far away in a vehicle before they die. Both cases are rare, so we treat players with a longest kill of 1000 m or more as potential cheaters.

plt.figure(figsize=(12,4))

# histplot replaces the deprecated distplot

sns.histplot(pubg_data['longestKill'], kde=True, color='orange')

plt.show()

pubg_data['potential cheaters'] = pubg_data['longestKill'] >= 1000

cheaters_data = pd.concat([cheaters_data, pubg_data[pubg_data['potential cheaters']==True]])

pubg_data.drop(pubg_data[pubg_data['potential cheaters']==True].index, inplace=True)

Weapons acquired by players in a match

In a match, most players acquire 0–10 weapons. Players who acquire 50 or more weapons are very likely cheaters or hackers, so we move them to the potential cheaters data. Likewise, a player who gets more than 10 kills without acquiring a single weapon looks suspicious, so we flag those players too.

plt.figure(figsize=(12,4))

# histplot replaces the deprecated distplot

sns.histplot(pubg_data['weaponsAcquired'], bins=10)

plt.show()

pubg_data['potential cheaters'] = pubg_data['weaponsAcquired'] >= 50

cheaters_data = pd.concat([cheaters_data, pubg_data[pubg_data['potential cheaters']==True]])

pubg_data.drop(pubg_data[pubg_data['potential cheaters']==True].index, inplace=True)

pubg_data['potential cheaters'] = (pubg_data['weaponsAcquired'] == 0) & (pubg_data['kills'] > 10)

cheaters_data = pd.concat([cheaters_data, pubg_data[pubg_data['potential cheaters']==True]])

pubg_data.drop(pubg_data[pubg_data['potential cheaters']==True].index, inplace=True)

Use of healing kits over a match

Most players use fewer than ten healing items in a match, but some players use 30 or more. That looks suspicious, so rather than take the risk, we mark them as potential cheaters.

plt.figure(figsize=(12,4))

# histplot replaces the deprecated distplot

sns.histplot(pubg_data['heals'], bins=10)

plt.show()

pubg_data['potential cheaters'] = pubg_data['heals'] >= 30

cheaters_data = pd.concat([cheaters_data, pubg_data[pubg_data['potential cheaters']==True]])

pubg_data.drop(pubg_data[pubg_data['potential cheaters']==True].index, inplace=True)

We have identified the impossible events and moved the players involved into separate data. But we still have 29 features, which is a lot. Models built using all these features will be heavy and impractical, so let’s drop some of the less important ones.
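The impossible-event rules used in this step can also be gathered in one place, which makes the thresholds easier to review and change. The sketch below is one possible arrangement, not the article's original code: the function name `label_potential_cheaters` and the rule names are hypothetical, while the thresholds are the ones used in this step. It labels rows in a single pass instead of moving them rule by rule, and it flags the same rows.

```python
import pandas as pd


def label_potential_cheaters(df):
    """Return (labels, rule_counts) for the impossible-event rules.

    labels is True where at least one rule fires for the row;
    rule_counts says how many rows each individual rule flagged.
    """
    total_distance = df["rideDistance"] + df["walkDistance"] + df["swimDistance"]
    # Hypothetical rule names; thresholds follow the ones used in this step.
    rules = {
        "kills_without_moving": (df["kills"] > 0) & (total_distance == 0),
        "too_many_kills": df["kills"] > 59,
        "very_long_kill": df["longestKill"] >= 1000,
        "too_many_weapons": df["weaponsAcquired"] >= 50,
        "kills_without_weapons": (df["weaponsAcquired"] == 0) & (df["kills"] > 10),
        "too_many_heals": df["heals"] >= 30,
    }
    fired = pd.DataFrame(rules)
    return fired.any(axis=1), fired.sum()


# Tiny made-up sample: rows 0, 1 and 3 each break one rule, row 2 breaks none.
sample = pd.DataFrame({
    "kills": [2, 3, 0, 12],
    "rideDistance": [0.0, 100.0, 0.0, 0.0],
    "walkDistance": [0.0, 500.0, 50.0, 900.0],
    "swimDistance": [0.0, 0.0, 0.0, 0.0],
    "longestKill": [10.0, 1200.0, 0.0, 80.0],
    "weaponsAcquired": [1, 4, 2, 0],
    "heals": [0, 2, 1, 3],
})
labels, rule_counts = label_potential_cheaters(sample)
print(labels.tolist())
print(rule_counts)
```

The per-rule counts are useful for checking that no single threshold flags an unreasonably large share of players.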

Step 3: Feature Extraction, Selection, and Engineering

In this section, we will apply feature selection techniques and try to select the best set of features for our model. One such method is to check whether two attributes are correlated. We want features that are not highly correlated with each other, and to check that, we can visualize the correlation matrix.

Correlation matrix

A correlation matrix is a table depicting correlation coefficients of all possible pairs of attributes present in the dataset. Visualizing the dependencies of features on each other is a very intuitive method.

plt.figure(figsize=[25,12])

# numeric_only=True skips the ID and matchType text columns

sns.heatmap(pubg_data.corr(numeric_only=True), annot=True, cmap="BuPu")

We can see that the pairs “winPoints & killPoints” and “kills & damageDealt” are strongly positively correlated. Two strongly correlated features carry largely the same information, so we can drop one from each pair. This method of feature selection is called filtering.
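Reading strongly correlated pairs off a large heatmap by eye is error-prone, so the same filtering idea can be expressed in code. This is a minimal sketch: the function name `highly_correlated_pairs` and the 0.8 threshold are assumptions chosen for illustration, not values from the original project.

```python
import numpy as np
import pandas as pd


def highly_correlated_pairs(df, threshold=0.8):
    """List (feature_a, feature_b, r) for numeric pairs with |r| >= threshold."""
    corr = df.select_dtypes("number").corr()
    # Keep the strict upper triangle so each pair appears once, without the diagonal.
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    pairs = upper.stack()
    # Comparisons with NaN are False, so masked-out cells never pass the filter.
    pairs = pairs[pairs.abs() >= threshold]
    return [(a, b, float(r)) for (a, b), r in pairs.items()]


# Made-up toy data: b is exactly 2 * a, c is only weakly related to either.
toy = pd.DataFrame({
    "a": [1, 2, 3, 4, 5],
    "b": [2, 4, 6, 8, 10],
    "c": [5, 1, 4, 2, 3],
})
print(highly_correlated_pairs(toy))
```

On the real data, one feature from each reported pair is a candidate for removal; which one to keep is still a judgment call.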

Feature Engineering

We already use totalDistance to store “swimDistance + rideDistance + walkDistance” for spotting cheaters, so we can drop the three individual distances from the final feature set. We also drop the identifier and categorical columns, as they are of no use in predicting the output.

# drop() returns a new DataFrame, so the result must be assigned back

pubg_data = pubg_data.drop(['damageDealt','winPoints','rideDistance','swimDistance','walkDistance','winPlacePerc'], axis=1)

pubg_data = pubg_data.drop(['Id','groupId','matchId','matchType'], axis=1)

Our feature engineering is done, and it’s time to decide which machine learning algorithm we should use to build our model. As we have already stated, this is a classification problem; some well-known classification algorithms are logistic regression, SVM, KNN, and Random Forest.

In this article, we will use the Random Forest algorithm to train our model. It needs minimal data cleaning effort, gives strong results, and measures the relative importance of each feature for the prediction.

Why Random Forest?

- Minimal data cleaning efforts are required.

- Robust to outliers.

- Reduces risk of overfitting (by using the bagging method)

- Measures the relative importance of each feature on the prediction.
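To illustrate the last point, the sketch below trains a small forest on synthetic data in which only one column determines the label and then reads scikit-learn's `feature_importances_` attribute. The data and the feature names are made up for the example; they are not PUBG features.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
# The label depends only on the first column; the other two are pure noise.
y = (X[:, 0] > 0).astype(int)

forest = RandomForestClassifier(n_estimators=40, random_state=0).fit(X, y)

# Importances are non-negative and sum to 1 across the features.
feature_names = ["informative", "noise_a", "noise_b"]  # hypothetical names
importances = dict(zip(feature_names, forest.feature_importances_))
for name, value in sorted(importances.items(), key=lambda item: -item[1]):
    print(f"{name}: {value:.3f}")
```

On the PUBG data, the same attribute can be read from the trained model to see which columns drive the cheater predictions.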

Step 4: Random Forests Implementation

Random Forest is a supervised learning algorithm that uses an ensemble learning approach for regression and classification. It builds multiple decision trees and merges their predictions for more accurate predictions. This process takes place in three steps:

Bootstrapping

If we have ‘n’ data samples and ‘m’ decision trees, each of the ‘m’ trees gets its own training set of ‘n’ samples drawn at random from the data with replacement. A sample can therefore appear several times in one tree’s training set and not at all in another’s.
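A small standard-library sketch of bootstrapping follows; the helper name `bootstrap_sample` is hypothetical. Because rows are drawn with replacement, each tree sees only part of the distinct rows (roughly 63% for large n), which is what makes the trees differ from one another.

```python
import random


def bootstrap_sample(n_rows, rng):
    """Draw n_rows row indices with replacement: one tree's training sample."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


rng = random.Random(0)
n_rows, n_trees = 1000, 3
samples = [bootstrap_sample(n_rows, rng) for _ in range(n_trees)]

# Fraction of distinct rows that each tree actually sees.
unique_fraction = [len(set(sample)) / n_rows for sample in samples]
print(unique_fraction)
```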

Parallel Training

Each decision tree is trained independently and in parallel.

Aggregation

In aggregation, we use the concept of majority voting in classification. An average of all the outputs predicted by individual decision trees is taken in regression for a more accurate and stable prediction.
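The aggregation step can be sketched with the standard library alone. The function names below are hypothetical, and the inputs stand in for the outputs of individual trees. Note that scikit-learn's classifier averages the trees' class probabilities rather than counting hard votes, so this is an illustration of the idea rather than of that library's internals.

```python
from collections import Counter
from statistics import mean


def majority_vote(tree_predictions):
    """Classification: return the label predicted by the most trees.

    On a tie, the label that appears first in the list wins.
    """
    return Counter(tree_predictions).most_common(1)[0][0]


def average_prediction(tree_predictions):
    """Regression: return the mean of the trees' numeric predictions."""
    return mean(tree_predictions)


# Made-up outputs of five trees for one player (True means "cheater").
votes = [True, False, True, True, False]
print(majority_vote(votes))

# Made-up numeric outputs of three regression trees.
print(average_prediction([1.0, 2.0, 3.0]))
```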

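Before building the model, we need to split the dataset into training and testing samples. We will use the test set to evaluate the model’s performance later. Because the flagged players were moved out of pubg_data in Step 2, we first add them back so that the target column contains both classes.

from sklearn.model_selection import train_test_split

# Assumption: re-attach the flagged rows, keeping only the columns still in pubg_data

pubg_data = pd.concat([pubg_data, cheaters_data[pubg_data.columns]])

target = pubg_data['potential cheaters']

features = pubg_data.drop('potential cheaters', axis=1)

x_train, x_test, y_train, y_test = train_test_split(features, target, train_size=0.3, random_state=0)

Model Building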

Let’s use Scikit-learn random forest for building the model on our training data.

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score, roc_auc_score

model = RandomForestClassifier(n_estimators=40, min_samples_leaf=3, max_features='sqrt')

model.fit(x_train, y_train)

y_pred = model.predict(x_test)

y_predtrain = model.predict(x_train)

print("test data accuracy: ", accuracy_score(y_test, y_pred))

print("test data precision score: ", precision_score(y_test, y_pred))

print("test data recall score: ", recall_score(y_test, y_pred))

print("test data f1 score: ", f1_score(y_test, y_pred))

print("test data area under curve (auc): ", roc_auc_score(y_test, y_pred))

Step 5: Performance Evaluation

Now our model is trained, so we must check its performance. There are various evaluation metrics for classification models. We will evaluate our model on several of them, because more than one metric is often needed to judge a model.

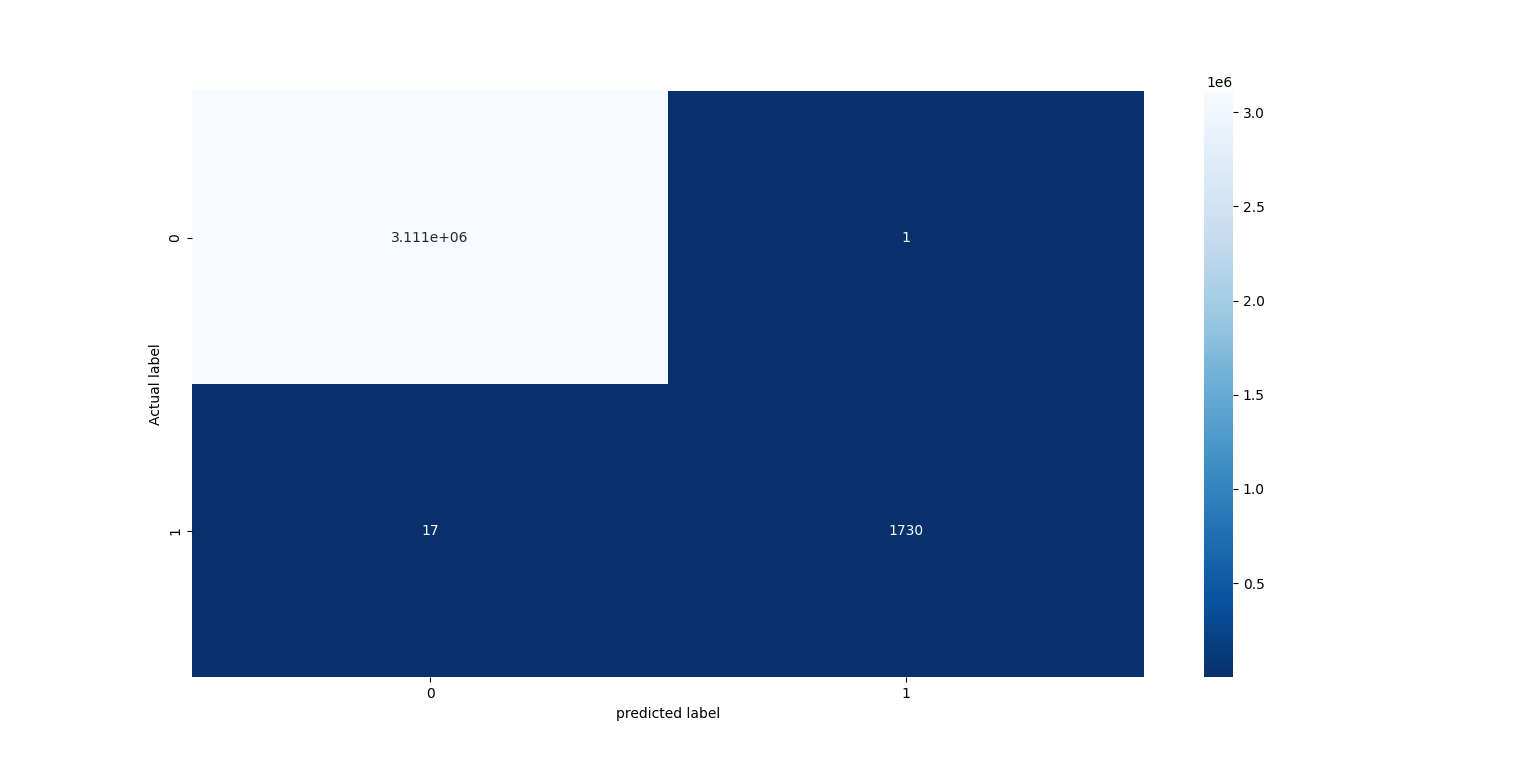

Confusion Matrix

The confusion matrix is a clear way to summarize the performance of a classification model: it counts the true positives, false positives, true negatives, and false negatives. It is one of the most used evaluation tools for classification problems.
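To make the link between the confusion matrix and the usual metrics concrete, here is a small standard-library sketch. The function names and the toy labels are made up for illustration; on the real data, the scikit-learn metric functions compute the same quantities.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = cheater)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)
    fp = sum(1 for t, p in pairs if not t and p)
    fn = sum(1 for t, p in pairs if t and not p)
    tn = sum(1 for t, p in pairs if not t and not p)
    return tp, fp, fn, tn


def classification_metrics(tp, fp, fn, tn):
    """Derive accuracy, precision, recall and F1 from the four counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    both = precision + recall
    f1 = 2 * precision * recall / both if both else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up labels: 2 cheaters among 10 players, one caught and one false alarm.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
counts = confusion_counts(y_true, y_pred)
metrics = classification_metrics(*counts)
print(counts)
print(metrics)
```

In this toy case the accuracy is 0.8 while precision and recall are both 0.5, which shows why accuracy alone can look better than the model really is when cheaters are rare.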

test data accuracy: 0.9999942175660065

test data precision score: 0.9994222992489891

test data recall score: 0.9902690326273612

test data f1 score: 0.9948246118458884

test data area under curve (auc): 0.995134355600319

Awesome! We achieved 99.99% accuracy on our test dataset, with a precision of 99.94% and a recall of 99.03%. This is a simplified version of how PUBG’s security team can use machine learning to identify and ban cheaters and make the gameplay fairer for all.

Use-cases of Machine Learning In Gaming

PUBG bot detection

The use of NPCs is widespread in online games. These are non-player characters, or computer-controlled players, that offer users more exciting gameplay. PUBG categorizes them as bots, and we can use the same approach to build a machine learning model to detect them.

Advancement of Bots

Bots are nothing but algorithms interacting with the virtual environment. We can make them more advanced by allowing them to learn from their mistakes or from historical data. As the bots improve, the game will depend less on how many players are online.

Possible Interview Questions

If you mention this project in your resume, these are some possible interview questions that can be asked in Machine Learning interviews:

- What are the attributes in the dataset?

- How did you identify the impossible events?

- What data analysis techniques have you used?

- Did you use any feature engineering or selection techniques? If yes, please describe it in detail.

- What is ensemble learning? Or Why Random Forests?

- What’s the accuracy or precision of your model?

- Can you name some other use cases of ML in the gaming industry?

Conclusion

Machine learning has great potential to change the gaming industry. It can enhance the user experience by making games less complex, introducing NPCs, and making games more realistic. Microsoft is working with Nvidia to make games more realistic by quickly rendering objects without pixel losses. Cheating detection is a widespread problem that every game faces in some manner, and we have discussed the solution in this blog.

Next Blog: Introduction to XG-Boost Algorithm in Machine Learning

Enjoy learning, Enjoy algorithms!


©2023 Code Algorithms Pvt. Ltd.

All rights reserved.