Throttling and Rate Limiting

What is Rate Limiting?

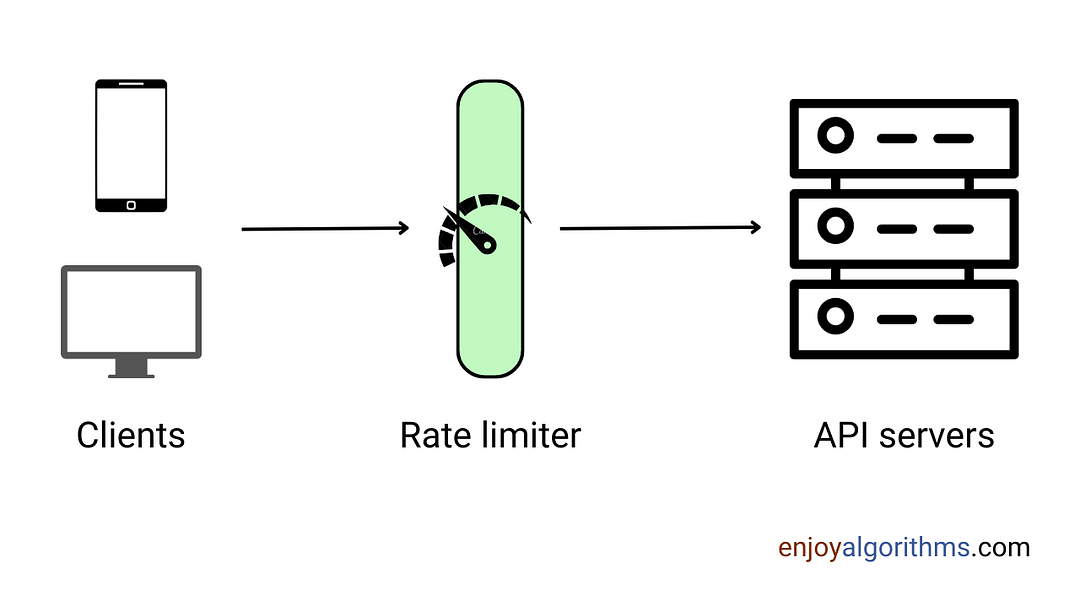

In distributed systems, rate limiting is one of the critical techniques to control the amount of traffic going through a system within a specific time period. The goal here is to prevent overuse of resources by limiting the rate at which events can occur. In other words, rate limiting is one of the protective mechanisms to restrict excessive use of services and preserve their availability.

For example, a website can use rate limiting to prevent someone from repeatedly attempting to log in to an account with incorrect credentials. If a user tries to log in too frequently within a certain time window, the website can either block their access or slow down their requests. This will help to prevent malicious actors from overburdening the system and initiating attacks like DoS (Denial of Service).

How rate limiting works?

A rate limiter tracks the IP addresses of incoming requests and the time between them. If a single IP address makes too many requests within a specified timeframe, the rate limiter will throttle the IP address and not fulfil its requests for a certain period of time. In this way, a rate limiter acts like a virtual traffic cop, telling users who are making requests rapidly to slow down. This is similar to a traffic officer pulling over a driver for speeding.

Here is how it generally works:

- When a client sends a request to a server, the server identifies the client based on various factors like IP address, user account, API key, etc.

- Now server will evaluate the request against predefined rate limits i.e. number of requests allowed within a certain time period (e.g., 100 requests per minute).

- Based on the evaluation, the server will decide whether to allow, throttle, or reject the request. If the request falls within the allowed limits, it is processed as usual. If the request exceeds the limits, the server may throttle the request (delay processing) or reject it.

- After the end of the specified time period (e.g., every minute), the rate limits reset so that the client can make a new set of requests within the limits.

What is API rate limiting?

Granting unlimited access to an API can be risky because anyone can use the API as much as they want. So rate limiting can protect APIs from being overwhelmed by too many requests.

An API that uses rate limiting can throttle or temporarily block any client that tries to make too many API calls. Here rate limiter sets a threshold on the number of requests allowed per API key within a specified timeframe (requests per minute or requests per hour). When a client exceeds the threshold, the API can respond with an error code or delay processing the request. Note: One can implement API rate limiting at various points like the API gateway, load balancer, or within the application code.

Benefits of rate-limiting

- Avoid resource depletion due to a Denial of Service (DoS) attack: By limiting the number of excess calls, a rate limiter can prevent DoS attacks. For example, Twitter restricts users to 300 tweets every three hours, and Google Docs APIs have a default limit of 300 per user every 60 seconds for read queries.

- Reduce expenses: Limiting request counts can help to reduce the number of servers needed and allocate more resources to high-priority APIs. This is important for companies that use paid third-party APIs, which may be charged on a per-call basis.

- Ensure that servers are not overloaded: Rate limiter can reduce server load by filtering out extra requests produced by bots or users.

Methods to implement API Rate-Limiting

Request Queues

There are many request queue libraries that make it easy to implement rate limiting in different development environments. These libraries often come with pre-written code and can be easily accessible. For example, there are request rate limiter libraries that limit number of requests per second to a specific number and queue any additional requests. This can be an easy-to-use solution for API development.

Throttling

Throttling is another common method for implementing rate limiting. It involves establishing a temporary state in which each request is evaluated by the API. If throttle is triggered, a user may be disconnected or have their bandwidth reduced. Throttling can be done at the application, API, or user level.

There are many products available that make it easy to implement throttling like Hybrid Data Pipeline from Progress, which provides throttled API access to various databases and services including IBM DB2, Oracle, SQL Server, MySQL, PostgreSQL, SAP Sybase, Hadoop Hive, Salesforce, and Google Analytics.

Another technique to make scalable rate-limited APIs is to use rate-limiting algorithms.

Rate-limiting Algorithms

Fixed window technique

The fixed window technique uses an incremental counter to track the number of incoming requests over a fixed time window. If number of requests exceeds the specified limit during this time period, any additional requests will be discarded.

For example, a server can implement an algorithm to allow 300 API requests per minute. This means that the server will not serve more than 300 requests between 8:00 and 8:01. Here window will reset at 8:01 so that another 300 requests will served in the next time window [8:01, 8:02].

Leaky bucket technique

The leaky bucket technique is easy to implement and efficient in terms of memory usage. It converts incoming requests into a First In First Out (FIFO) queue so that it can process requests at a consistent rate.

So leaky bucket algorithms don't rely on specified timeframes. Instead, they focus on the fixed length of request queues, regardless of time. The server will service requests on a FIFO basis, with new requests joining the back of the queue. If a new request arrives when the queue is full, it will be dropped.

This idea will help in smoothing out traffic spikes because the server receives API requests constantly (requests are forwarded to the server one by one) and there are no sudden bursts of requests. However, it is not perfect because the queue is static and there is a higher chance of starvation.

Sliding window technique

Sliding-window algorithm is time-based and similar to the fixed-window algorithm, but they differ in the starting point of each time window. Here the timeframe starts when a user makes a new request rather than at a predetermined time. For example, if the first request arrives at 7:00:18 am (and the rate limit is 200 per minute), the server will allow up to 200 requests until 7:01:18.

This idea mitigates the starvation issue of the leaky bucket idea by starting a new time window whenever a request is made. So this is suitable for processing large requests while being lightweight and fast to run.

Thanks to Navtosh for his contribution in creating the first version of this content. If you have any queries or feedback, please write us at contact@enjoyalgorithms.com. Enjoy learning, Enjoy system design!